Tools to Assess Regional Anesthesia Skills Within a Competency-Based Curriculum

Cite as: Chuan A, McLeod G. Tools to assess regional anesthesia skills within a competency-based curriculum. ASRA Pain Medicine News 2022;47. https://doi.org/10.52211/asra080122.034

Introduction

Competency-based medical education (CBME) applies a holistic model of training that strives to produce graduates who can demonstrate the key capabilities deemed essential to deliver high-quality regional anesthesia (RA). These outcomes include knowledge, procedural proficiency, safety, quality, communication, and professionalism.

A key aspect of CBME is programmed assessment to demonstrate competency in these outcomes; the United Kingdom General Medical Council1 describes it as: “a systematic procedure for measuring a trainee’s progress or level of achievement, against defined criteria (in order) to make a judgement…” In this article, we describe the evidence-based assessment tools that are being used to assess RA.

Categories of Assessment Tools

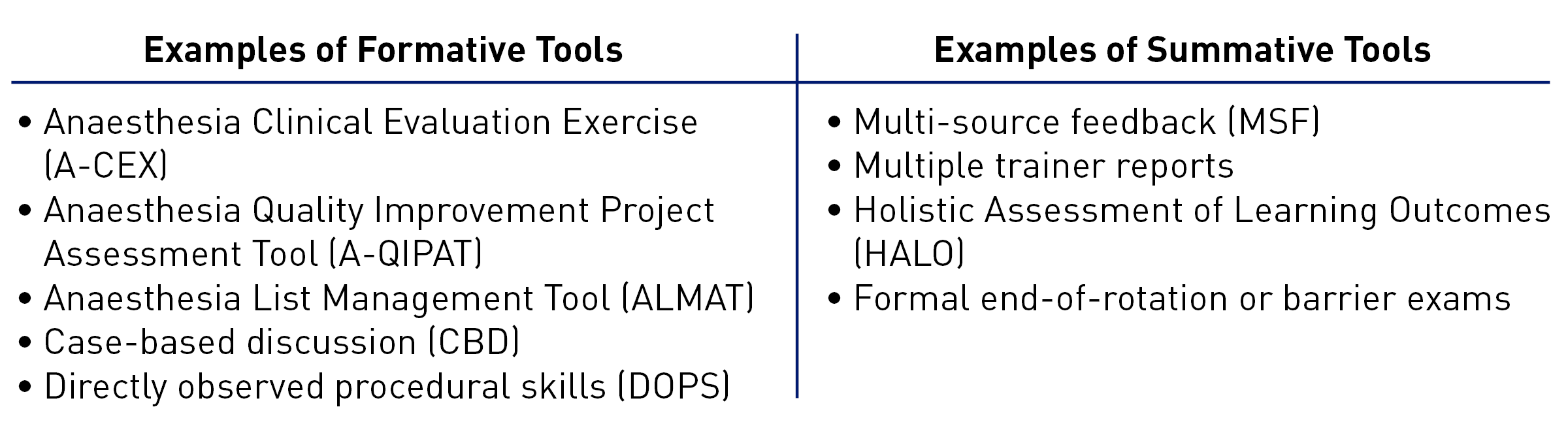

Assessment tools can be broadly divided into formative (assessment for learning, emphasizing feedback, learner growth, and self-reflection) and summative (assessment of learning, evaluating the level of competency) tools (Figure 1). Formative tools come under the umbrella of supervised learning events (SLE), with a portfolio of SLEs showing evidence of progression in undertaking RA procedures of increasing complexity. Summative tools provide snapshots of performance at the time of assessment.

Figure 1. Real-life examples of formative and summative tools used in assessment of anesthesiology residents and trainees.

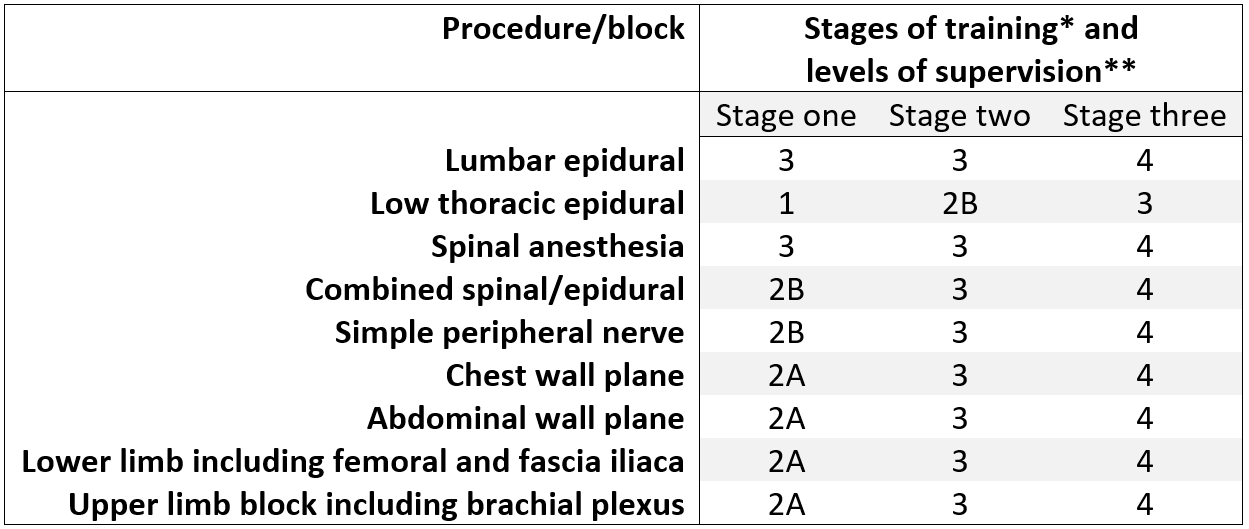

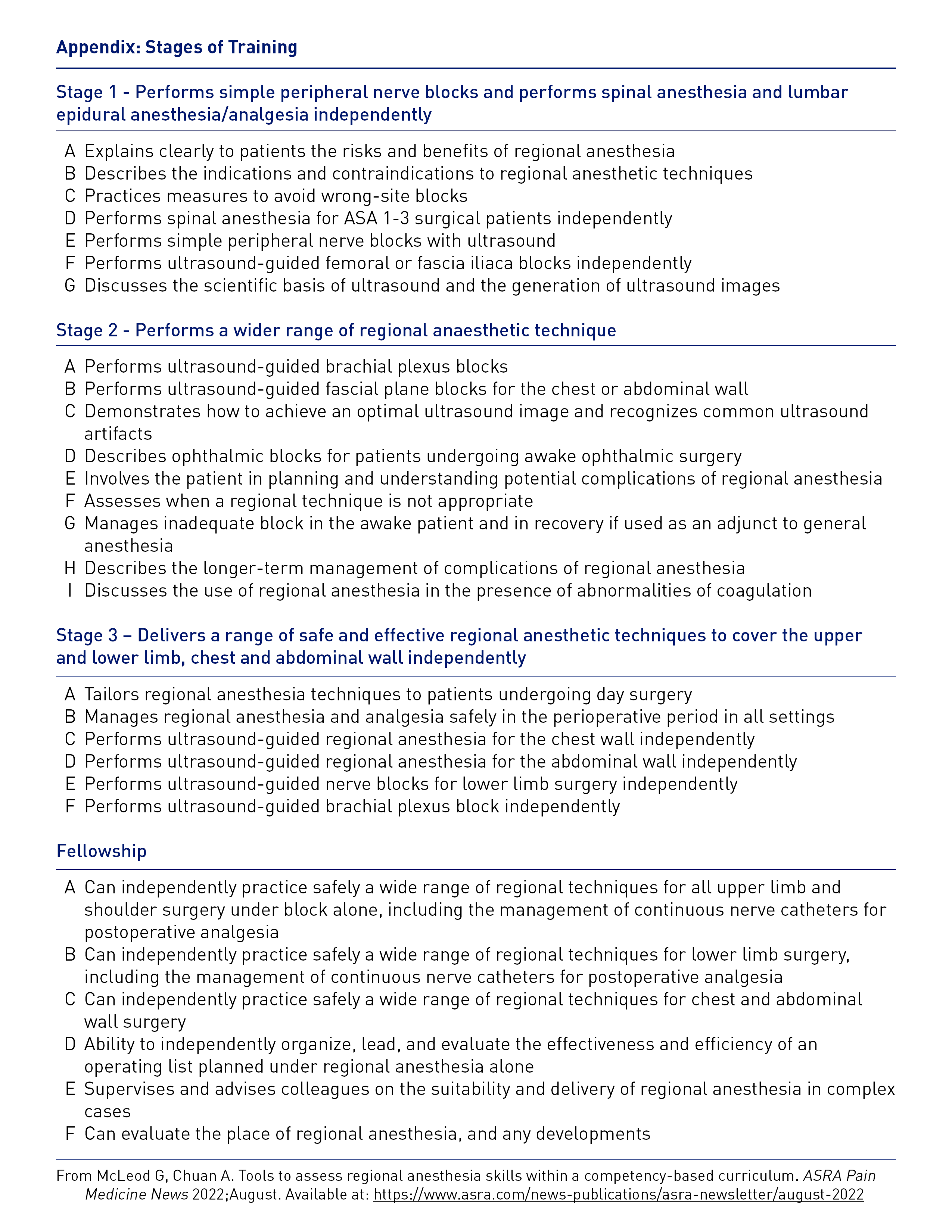

Clinical competency in an RA procedure also may be assessed by the level of entrustment, measured by the appropriate supervision level. This holistic assessment takes into account multiple competencies, seniority of the trainee, and complexity of the task. An example from the United Kingdom describes a matrix of level of supervision expected at each stage of RA training (Table 1). More recently, formal descriptions of entrustable professional activities (EPA) that include RA tasks have been proposed.2

Table 1. Stages of training and expected levels of supervision of anesthesiology trainees by consultants in the United Kingdom curriculum.

* Increasing trainee seniority from Stages 1 to 3; a full description of stages is included in Appendix

** Levels of supervision. 1: Direct supervisor involvement, physically present in operating room; 2A: Supervisor in operating suite, available to guide aspects of activity through monitoring at regular intervals; 2B: Supervision within hospital for queries, able to prompt direction/assistance; 3: Supervisor on call from home for queries able to provide directions via phone or non-immediate attendance; 4: Should be able to manage independently with no supervisor involvement (although should inform consultant supervisor as per local protocols)

Note: In the UK and Australian health systems, suitably senior trainees may be supervised by consultants who are not on-site in the hospital.

Specific Assessment Tools

Assessments designed to measure RA skills should capture the essence of individual performance. Measurements of performance, otherwise termed metrics, should be quantifiable and unambiguous to reduce bias.

Checklists

Checklists are developed using an iterative Delphi process whereby a large number of expert regional anesthesiologists (i) identify essential steps (interventions) before and during nerve block that reflect a high level of clinical practice and (ii) pinpoint errors (including sentinel errors3 representative of serious adverse clinical events that must be avoided). Checklists have been used in simulation4 and clinical settings.3,5 They may focus on specific blocks such as axillary block,3 multiple blocks,5 multiple blocks using a range of nerve targeting modalities,6 or translation from cadavers to patients.4

Checklists can be used to generate learning curves from repeated practice, provide detailed formative feedback, and formally test performance after training. The scanning and needling phases of the procedure should be recorded onto the hard disk of the ultrasound machine and recorded externally by cameras linked to computers.

Assessment uses either binary3,4 or ordinal Likert scales.6 Performance is quantified as the cumulative score of all items, provided that error items are scored inversely. However, raters need considerable training and inter-rater agreement of greater than 80% on testing before assessment of performance. Reliability of testing increases with multiple raters and time points.
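The scoring described above can be sketched in a few lines. This is a minimal illustration, not a published scoring algorithm: the function names, the 0-to-maximum item scale, and the use of simple percent agreement (rather than, for example, a kappa statistic) are assumptions made for the example.

```python
from typing import Sequence


def checklist_score(step_items: Sequence[int],
                    error_items: Sequence[int],
                    max_per_item: int = 1) -> int:
    """Cumulative checklist score with error items scored inversely.

    Assumes every item is rated from 0 to max_per_item
    (max_per_item=1 gives a binary checklist).
    """
    for value in list(step_items) + list(error_items):
        if not 0 <= value <= max_per_item:
            raise ValueError("item rating outside the assumed 0..max range")
    steps = sum(step_items)
    # An error that was NOT committed (rated 0) earns full marks.
    errors_avoided = sum(max_per_item - e for e in error_items)
    return steps + errors_avoided


def percent_agreement(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Percentage of items on which two raters gave the same rating."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("raters must score the same non-empty item list")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


if __name__ == "__main__":
    # Four steps (three completed) and two error items (one committed).
    print(checklist_score([1, 1, 0, 1], [0, 1]))
    # Two raters who agree on four of five items.
    print(percent_agreement([1, 1, 0, 1, 0], [1, 1, 0, 0, 0]))
```

In this sketch, a pair of raters would be compared on a set of test videos first, and only pairs exceeding the agreement threshold would go on to score trainee performance.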

Key Performance Indicators

Procedure duration does not necessarily correlate with performance, although skilled anesthesiologists are much more likely to perform blocks well on the first needle pass and minimize tissue trauma. Key performance indicators (KPIs) can provide an objective assessment of combined speed and accuracy by weighting each checklist item by its degree of importance (from prior Delphi scores) and its degree of difficulty. The rate correct score (RCS) is calculated by dividing the KPI by time, thus providing a marker of efficiency that balances the quality of the intervention against the time spent.7
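As a rough illustration of how a weighted KPI and the RCS relate, the sketch below sums a weight for each achieved checklist item and divides by procedure time. The article does not give the exact weighting formula, so multiplying an importance weight by a difficulty weight is an assumption, as are the class and function names and the example numbers.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ChecklistItem:
    achieved: bool      # was the step performed correctly?
    importance: float   # hypothetical weight, e.g. derived from Delphi scores
    difficulty: float   # hypothetical weight for how hard the step is


def kpi(items: Sequence[ChecklistItem]) -> float:
    """Weighted performance: sum of importance x difficulty over achieved items.

    The product is an assumed weighting scheme used only for illustration.
    """
    return sum(i.importance * i.difficulty for i in items if i.achieved)


def rate_correct_score(kpi_value: float, duration_s: float) -> float:
    """RCS = KPI divided by procedure time (here in seconds)."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return kpi_value / duration_s


if __name__ == "__main__":
    block = [
        ChecklistItem(achieved=True, importance=2.0, difficulty=1.5),
        ChecklistItem(achieved=False, importance=3.0, difficulty=1.0),
        ChecklistItem(achieved=True, importance=1.0, difficulty=1.0),
    ]
    score = kpi(block)
    print(score, rate_correct_score(score, duration_s=200.0))
```

Two operators with the same KPI would then be separated by the RCS according to how long each took, which is the efficiency trade-off the text describes.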

Global Rating Scores

The global rating score (GRS) is a subjective 5-item Likert scale, initially used to assess surgical performance, that provides information on a range of non-technical skills and professionalism. It correlates with checklists and shows good validity for simulated axillary3 and interscalene4 blocks. Checklists and GRS scores provide a comprehensive picture of technical and non-technical skills.6

Psychometric Tests

Trainees may adapt their behavior during training and testing to align to prior personal and group expectations. Dynamic self-confidence scores have been proposed as a means of assessing performance. However, evidence from prospective cadaver-based simulation studies investigating anesthesiology trainees and medical students failed to show any relationship between self-confidence and self-rating of performance on either checklist or GRS scores.7,8 Self-rating scores rose in all participants irrespective of performance level.

Visuospatial and psychomotor skills are both needed to perform RA well. Studies investigating the performance of medical students9 and anesthesiology trainees10,11 indicate that visuospatial skills are more important. For example, the Mental Rotation Test-A discriminated between the ultrasound-guided needling skills of medical students on a turkey model9 and between the brachial plexus scanning skills of anesthesia trainees.11

Gamification has been proposed as a means of improving visuospatial ability. Shafqat et al. investigated this hypothesis by comparing the scanning and needling performance of medical students with variable exposure to video games. Gamers were characterized by high visuospatial ability and had higher skill levels on a GRS.12 In a subsequent randomized controlled study,13 volunteers with no prior experience of ultrasound-guided regional anesthesia and established low baseline mental rotation ability were randomized to mental rotation training or no training. Training consisted of the manipulation and rotation of 3D models. Performance of a simulated needling task was better in the training group, with fewer errors.

Other Tests Used in Clinical Practice

Direct observation of procedural skills (DOPS) is a workplace-based assessment tool that uses a Likert scale, not unlike the GRS score, and is subject to the same constraints as all subjective multiresponse rating scales. As such, internal reliability or consistency was rated as good, but inter-rater reliability was insufficient to be used as a formative feedback tool.14

Cumulative sum scoring (CUSUM) has captured the imagination of anesthesiologists but relies on a nonstandardized binary outcome and does not measure the quality of the preceding clinical intervention. Thus, given the high reliability of checklist scoring and its application to indices of key performance, CUSUM should not be used for assessment.15
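To make the limitation concrete, the following sketch computes a CUSUM learning curve in one commonly described form, where each failure raises the running sum and each success lowers it. The function name and the acceptable and unacceptable failure rates are assumptions chosen for illustration. Only the binary success or failure label enters the calculation, so nothing about how well the block was performed is captured.

```python
import math
from typing import List, Sequence


def cusum_curve(failures: Sequence[bool], p0: float, p1: float) -> List[float]:
    """Running CUSUM for a sequence of procedures.

    failures: True where a procedure was judged a failure.
    p0, p1:   assumed acceptable and unacceptable failure rates (0 < p0 < p1 < 1).
    """
    if not 0 < p0 < p1 < 1:
        raise ValueError("require 0 < p0 < p1 < 1")
    p_term = math.log(p1 / p0)
    q_term = math.log((1 - p0) / (1 - p1))
    s = q_term / (p_term + q_term)  # amount subtracted for each success
    curve: List[float] = []
    total = 0.0
    for failed in failures:
        total += (1 - s) if failed else -s
        curve.append(total)
    return curve


if __name__ == "__main__":
    # Two successes followed by one failure, with hypothetical rates.
    print(cusum_curve([False, False, True], p0=0.1, p1=0.2))
```

How a "failure" is defined is left to whoever labels the outcomes, which is the nonstandardized element the text refers to.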

The Future of Assessments?

Procedural time, movement, and path length can be measured in 3D space using electromagnetic inertial measurement devices that continuously track the motion of digits and arms.16 Application to supraclavicular block demonstrated both construct validity between novices and experts and concurrent validity (correlation) between metrics.17 Two studies investigating the effect of piezo trackers at the distal tip of block needles showed reduced procedural time for out-of-plane insertions18 and reductions in hand movements18,19 and path lengths.19
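Path length is the simplest of these motion metrics to illustrate: it is the total distance travelled by a tracked sensor between successive position samples. The sketch below assumes the tracker exports a list of (x, y, z) coordinates in consistent units; the function name and sample data are hypothetical, and real devices may filter or resample the signal before computing the metric.

```python
import math
from typing import Sequence, Tuple

Point3D = Tuple[float, float, float]


def path_length(positions: Sequence[Point3D]) -> float:
    """Sum of straight-line distances between consecutive 3D samples."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


if __name__ == "__main__":
    # Hypothetical hand positions in centimetres.
    samples = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
    print(path_length(samples))
```

A shorter path length for the same task is generally read as more economical movement, which is how the metric separates novices from experts in the studies cited above.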

Another form of body tracking is eye gaze tracking, which provides detailed quantitative real-time data about cognitive attentional processes: what users are looking at and what they may be thinking. Commercial eye trackers offer qualitative eye gaze “color heat-maps” and quantitative measures of eye gaze: fixations, the periods during which cognitive attention is relatively stable at a given location; saccades, the rapid motions of the eye from one fixation to another; and glances, the number of saccade visits to the area of interest. However, analysis is laborious and requires a trained psychologist. Research algorithms, on the other hand, offer real-time feedback and learning curves.7

Other future digital teaching technologies include 360-degree video, augmented reality, and virtual reality. The latter two provide holograms in the field of view of operators that either maintain contact with the real world or immerse individuals within a 3D interactive world. No assessment tools have yet been validated for such technologies.

Conclusion

In conclusion, checklists of steps and errors are the most reliable form of assessment. However, video raters must be trained and show inter-rater agreement of at least 80% before assessment of ultrasound and external videos can be valid and meaningful.

Graeme McLeod, MBChB, MD, is an honorary professor at Ninewells Hospital at the University of Dundee in Dundee, United Kingdom. Dr. McLeod is a clinical advisor to Optomize Ltd., Glasgow, and Xavier Bionix Ltd., Dundee.

Alwin Chuan, MBBS, PhD, is an associate professor at Liverpool Hospital, University of New South Wales, in Sydney, Australia.

References

1. Excellence by Design: Standards for Postgraduate Curricula. Manchester, United Kingdom: The General Medical Council. Available at: www.gmc-uk.org/education/postgraduate/standards_for_curricula.asp. Accessed June 27, 2022.

2. Woodworth GE, Marty AP, Tanaka PP, et al. Development and pilot testing of entrustable professional activities for US anesthesiology residency training. Anesth Analg 2021;132:1579-91. https://doi.org/10.1213/ane.0000000000005434

3. Ahmed OM, O'Donnell BD, Gallagher AG, et al. Construct validity of a novel assessment tool for ultrasound-guided axillary brachial plexus block. Anaesthesia 2016;71:1324-31. https://doi.org/10.1111/anae.13572

4. McLeod G, McKendrick M, Taylor A, et al. Validity and reliability of metrics for translation of regional anaesthesia performance from cadavers to patients. Br J Anaesth 2019;123:368-77. https://doi.org/10.1016/j.bja.2019.04.060

5. Cheung JJ, Chen EW, Darani R, et al. The creation of an objective assessment tool for ultrasound-guided regional anesthesia using the Delphi method. Reg Anesth Pain Med 2012;37:329-33. https://doi.org/10.1097/AAP.0b013e318246f63c

6. Chuan A, Graham PL, Wong DM, et al. Design and validation of the regional anaesthesia procedural skills assessment tool. Anaesthesia 2015;70:1401-11. https://doi.org/10.1111/anae.13266

7. McKendrick M, Sadler A, Taylor A, et al. The effect of an ultrasound-activated needle tip tracker needle on the performance of sciatic nerve block on a soft embalmed Thiel cadaver. Anaesthesia 2021;76:209-17. https://doi.org/10.1111/anae.15211

8. McLeod GA, McKendrick M, Taylor A, et al. An initial evaluation of the effect of a novel regional block needle with tip-tracking technology on the novice performance of cadaveric ultrasound-guided sciatic nerve block. Anaesthesia 2020;75:80-8. https://doi.org/10.1111/anae.14851

9. Shafqat A, Ferguson E, Thanawala V, et al. Visuospatial ability as a predictor of novice performance in ultrasound-guided regional anesthesia. Anesthesiology 2015;123:1188-97. https://doi.org/10.1097/SA.0000000000000221

10. Smith HM, Kopp SL, Johnson RL, et al. Looking into learning: visuospatial and psychomotor predictors of ultrasound-guided procedural performance. Reg Anesth Pain Med 2012;37:441-7. https://doi.org/10.1097/ALN.0000000000000870

11. Duce NA, Gillett L, Descallar J, et al. Visuospatial ability and novice brachial plexus sonography performance. Acta Anaesthesiol Scand 2016;60:1161-9. https://doi.org/10.1111/aas.12757

12. Shafqat A, Mukarram S, Bedforth NM, et al. Impact of video games on ultrasound-guided regional anesthesia skills. Reg Anesth Pain Med 2020;45:860-5. http://dx.doi.org/10.1136/rapm-2020-101641

13. Hewson DW, Knudsen R, Shanmuganathan S, et al. Effect of mental rotation skills training on ultrasound-guided regional anaesthesia task performance by novice operators: a rater-blinded, randomised, controlled study. Br J Anaesth 2020;125:168-74. https://doi.org/10.1016/j.bja.2020.04.090

14. Chuan A, Thillainathan S, Graham PL, et al. Reliability of the direct observation of procedural skills assessment tool for ultrasound-guided regional anaesthesia. Anaesth Intensive Care 2016;44:201-9. https://doi.org/10.1097/cj9.0000000000000158

15. Starkie T, Drake EJ. Assessment of procedural skills training and performance in anesthesia using cumulative sum analysis (CUSUM). Can J Anaesth 2013;60:1228. https://doi.org/10.1007/s12630-013-0045-1

16. Kasine T, Romundstad L, Rosseland LA, et al. Ultrasonographic needle tip tracking for in-plane infraclavicular brachialis plexus blocks: a randomized controlled volunteer study. Reg Anesth Pain Med 2020;45:634-9. https://doi.org/10.1136/rapm-2020-101349

17. Chin KJ, Tse C, Chan V, et al. Hand motion analysis using the Imperial College Surgical Assessment Device: validation of a novel and objective performance measure in ultrasound-guided peripheral nerve blockade. Reg Anesth Pain Med 2011;36:213-9. http://dx.doi.org/10.1097/AAP.0b013e31820d4305

18. Kasine T, Romundstad L, Rosseland LA, et al. Needle tip tracking for ultrasound-guided peripheral nerve block procedures-an observer blinded, randomised, controlled, crossover study on a phantom model. Acta Anaesthesiol Scand 2019;63:1055-62. https://doi.org/10.1111/aas.13379

19. Kasine T, Romundstad L, Rosseland LA, et al. The effect of needle tip tracking on procedural time of ultrasound-guided lumbar plexus block: a randomised controlled trial. Anaesthesia 2020;75:72-9. https://doi.org/10.1111/anae.14846